Do AIs Need Bodies?

At the very least it seems they need to understand ours

The body-mind conundrum has been the subject of much philosophizing ever since the first armchairs were built. And now, it is our turn to grapple with it. From a critical reader of last month's issue:

Wouldn't AGI machines need some kind of physical time-space conception of themselves as existing in a particular place at a particular moment in order to relate and refer to and be aware of both themselves and the world and other thinking things?

AGI is Artificial General Intelligence, the holy grail of many ambitious AI researchers: an AI system that can learn and perform any intellectual task that a human being can [1]. The proposition is that, if we assume self-consciousness is needed for AGI, the AI system has to associate itself with a body, and similarly understand other entities (e.g., people) as embodied individuals.

Let's discuss an argument that supports this proposition. We will then consider how much of it still holds for merely General Intelligence (rather than self-consciousness).

Bodies and Self-Consciousness

Self-consciousness entails knowing one's own feelings and desires in a first-person way [2]. It also entails forming intentions as a free agent. We saw in the previous issue that all this depends on learning a language that can refer to these features, even if that language is used only for private thought. Such a language is learnt by observing other people's behavior and use of the language [3]. But to do so, we need to be able to identify individual persons in space and time.

For example, if we were to observe that Tom is sad, we would first need to identify "Tom". This is done in both space and time. We need to pick out Tom as, e.g., the person associated with this human body to my right, and re-identify him as, e.g., the same person I saw yesterday, whose body did not look sad then. Only then do we have a chance of truly learning the word “sad”. Therefore there needs to be an understanding of enduring individual bodies in the physical world in order to learn the language that self-consciousness requires.

Such is the argument of the philosopher P.F. Strawson in his 1959 book Individuals [4]. He addresses the body-mind conundrum by claiming that the concept of a person, which includes both body and mind, is somewhat axiomatic and is the frame in which such questions arise [5].

Bodies and General Intelligence

If we drop the assumption that self-consciousness is necessary to reach AGI, perhaps the AI system can do without a body. But would it still need an understanding of embodiment?

Consider the biggest and baddest AI systems currently in vogue: Large Language Models like ChatGPT. These do amazingly well in answering questions posed to them, to the extent that Google is afraid they will encroach on its search business [6]. As good as these systems are, an exhaustive but fair critique of them by Yoav Goldberg [7] points out that they lack the key conceptions of events and time. If we squint hard enough at these criticisms, they look like a version of the body-mind problem:

In their training, the models consume text either as one large stream, or as independent pieces of information. They may pick up patterns of commonalities in the text, but they have no notion of how the texts relate to "events" in the real world. In particular, if a model is trained on multiple news stories about the same event, it has no way of knowing that these texts all describe the same thing, and it cannot differentiate it from several texts describing similar but unrelated events...

...the model doesn't have a notion of which events follow other events in their training stream. ... it cannot understand the flow of time in the sense that if it reads that "Obama is the current President of the United States" and yet in [another] text that "Obama is no longer the President", which of these things follow one another, and what is true now.

In this case, the trouble is with identifying and re-identifying events rather than persons. As with events, the concepts of time and space [8] matter for making sense of bodies. So one can posit that an understanding of embodiment would be sufficient for addressing the issues Goldberg identifies, though perhaps only a simpler, more limited form of that understanding is necessary.

I don't have a solution here, but can point to a research direction that might help with one of the issues: the issue of identifying and re-identifying events. In their paper Memorizing Transformers [9], published at the ICLR 2022 conference, Google researchers presented a way to extend language models via external memory that can be looked up efficiently. One can envisage a way to store "events" in this external memory, and update this store with new information on each event as it comes in, after checking which information belongs to which event, if any [10]. In this way, multiple references to the same event do not confuse the model, and it has one place to refer to when queries about the event come up.
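To make the idea of an event store a little more concrete, here is a minimal sketch in Python. It is not the method of the Memorizing Transformers paper, which retrieves stored attention keys and values by nearest-neighbour lookup inside the model. This toy swaps in a much cruder word-overlap score to decide which event a new report belongs to. The names `EventMemory`, `Event` and `MATCH_THRESHOLD`, the threshold value, and the use of a year as a timestamp are all assumptions made purely for illustration.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional, Set, Tuple

# Hypothetical cutoff: below this overlap score, a report starts a new event.
MATCH_THRESHOLD = 0.3


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Word overlap between two token sets, from 0.0 to 1.0."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


@dataclass
class Event:
    # Each report is stored as (timestamp, text).
    reports: List[Tuple[int, str]] = field(default_factory=list)
    vocabulary: Set[str] = field(default_factory=set)

    def add(self, timestamp: int, text: str) -> None:
        self.reports.append((timestamp, text))
        self.vocabulary |= tokenize(text)

    def latest(self) -> str:
        """Return the report with the highest timestamp."""
        return max(self.reports)[1]


class EventMemory:
    def __init__(self) -> None:
        self.events: List[Event] = []

    def find(self, text: str) -> Optional[Event]:
        """Return the best-matching stored event, or None if nothing is close."""
        tokens = tokenize(text)
        best, best_score = None, MATCH_THRESHOLD
        for event in self.events:
            score = jaccard(tokens, event.vocabulary)
            if score >= best_score:
                best, best_score = event, score
        return best

    def ingest(self, timestamp: int, text: str) -> Event:
        """File a report under an existing event, or open a new one."""
        event = self.find(text)
        if event is None:
            event = Event()
            self.events.append(event)
        event.add(timestamp, text)
        return event


memory = EventMemory()
memory.ingest(2009, "Obama is the current President of the United States")
memory.ingest(2017, "Obama is no longer the President of the United States")
memory.ingest(2017, "Heavy rain floods the streets of Mumbai")

# The two Obama reports land in one event; the flood report opens another.
answer = memory.find("Is Obama the President?")
print(len(memory.events), "events stored")
print(answer.latest() if answer else "no matching event")
```

Because each report carries a timestamp, the store can say which statement came later, which is the ordering Goldberg notes is missing from the training stream. Word overlap is of course far too blunt for real text; a learned similarity measure would have to take its place.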

Artificial Self-Consciousness

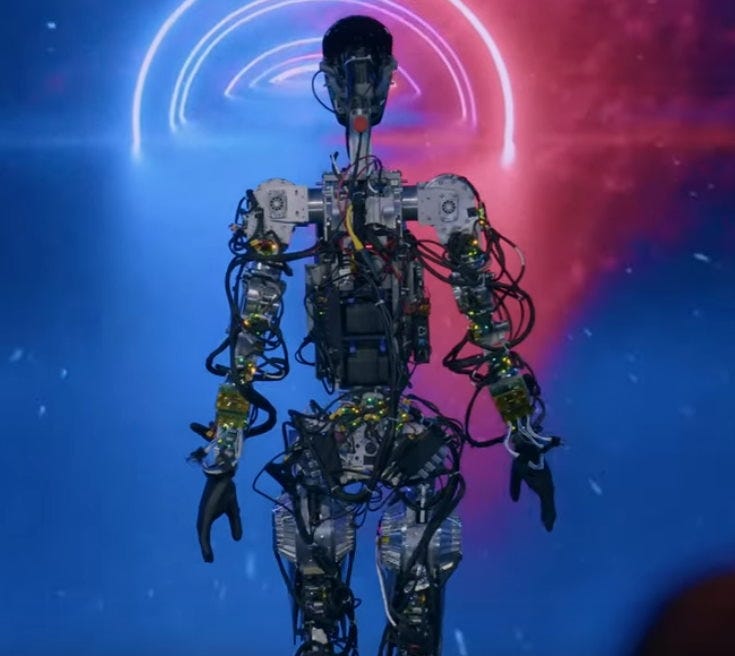

Suppose we do solve the problems of AI systems not understanding space and time, and give them the ability to identify and refer to unique events; suppose we solve the other issues that Goldberg brought up too. But suppose we don't give the AI a body, and it continues running out of a datacenter as pictured above. What then? Might it still "wake up" into self-consciousness?

If it does, it will associate itself with said datacenter, which looks nothing like the human body and cannot smile or frown, laugh or groan, exult or sulk. The self-conscious AI will then have an utterly alien makeup of expressible "emotions": perhaps a makeup comprehensible to other AI entities in similar datacenters, but not to us.

If a lion could speak, we couldn't understand him.

—Ludwig Wittgenstein [11]

Thanks to Jordan Scott for reading a draft of the previous issue and sparking some ideas for the current issue.

[1] From the Wikipedia article, which discusses this and associated terms such as weak AI, strong AI, and Artificial Narrow Intelligence (good at one or a few tasks only).

[2] There is no consensus definition here. Wikipedia's term self-awareness comes closest to my definition, though it does not include free agency or first-person privilege. See last month’s issue of this newsletter, Even AIs Need Community, Dec 2022, for further discussion.

[3] We discussed this in last month’s issue, through Ludwig Wittgenstein’s famous private-language argument.

[4] See Francis Alakkalkunnel and Christian Kanzian’s summary: Strawson's Concept of Person – A Critical Discussion

[5] From ibid.: “the concept of a person is the concept of a type of entity, such that both predicates ascribing states of consciousness and predicates ascribing corporeal characteristics, a physical situation & co. are equally applicable to a single individual of that single type”

[7] See his GitHub post. Excerpts quoted have been edited lightly for spelling, punctuation and clarity.

[8] While Goldberg did not mention space in his remarks, we can easily extend his comments on time to space. Just as AI systems cannot understand which event came earlier in time, they cannot understand which location is, say, further south. For example, if an AI system is trained on one text saying that Mexico is to the south of the United States, and on another saying that the United States is to the south of Canada, it would still not understand that Mexico is to the south of Canada (because it was never presented with that text).

[9] Memorizing Transformers. Wu et al. Mar 2022. https://arxiv.org/abs/2203.08913

[10] There are known mechanisms for this matching: the transformer architecture used in modern neural networks uses key, query and value matrices to implement attention, matching stored knowledge to the input. See the Illustrated Transformer for a good explanation.