Judgment Need Issue From Human Lips

AI can help surface evidence and legal argument, but cannot decide in our stead

Dario Amodei, CEO of Anthropic, recently expressed optimism that AI could "improve our legal and judicial system by making decisions and processes more impartial"[1]. My cofounder and I[2] share in this optimism, but not because AI can be impartial. Where it counts, humans need necessarily do the judging. Let me explain.

Amodei's focus is on AI serving as a correction to human frailties—bias, favoritism, and arbitrariness in interpreting legal criteria. We focus on the more mundane but sad fact of inadequate lawyering. What? Are we saying that there are too few lawyers in the world? Well, yes. The vast majority of litigants cannot afford and do not receive enough lawyer attention.

Difficult knowledge work is needed to:

- discover and review the pertinent documents,
- depose the relevant witnesses,
- research the applicable precedent case law, and
- form and write up the strongest arguments.

We believe AI can and will address this shortfall in available intellectual labor.

By helping surface all the factors that impinge on the outcome of the case, AI will make a meaningful impact on the delivery of justice. Legal outcomes will align with the facts of the case, rather than the quantity—and quality—of the lawyers on either side. AI need not replace judges or jurors. AI can simply help make humans' decisions best-informed.

And decide humans alone can. At least in matters of legal or moral consequence. Let's see why.

Fellow Citizens

The law is what we have agreed as a democracy to abide by. This is almost axiomatic. What is less appreciated is that the legal code needs a fleshed-out judicial system around it. We need courts of law to interpret and apply the code, resolve conflicts in the code, and perhaps above all, to show that the law does rule in the land and rule fairly. This last purpose is why trials are open to the public, and court proceedings are published.

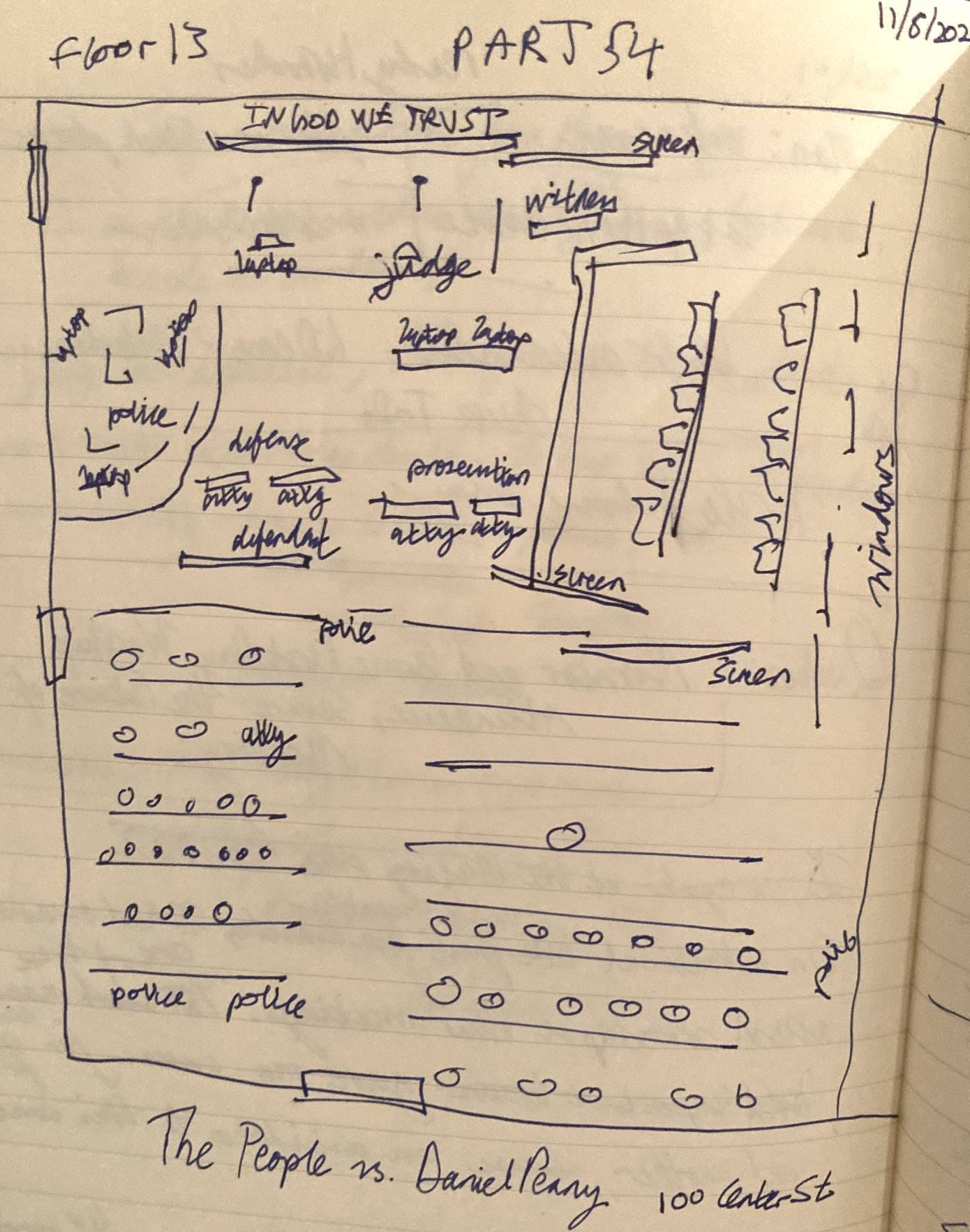

Criminal cases are brought in the name of the polity; in many jurisdictions they are titled The People vs. [Defendant]. The conviction and sentencing of a citizen is the polity ('The People') casting judgment and meting out punishment on one of its own. This requires a moral heft that AI cannot provide. Even if a speeding ticket is issued automatically to a driver caught on camera, the driver is going to metaphorically shake his fists at the town council which decided to install the camera, not the camera itself.

This need to pin an issue of judgment on named persons occurs in other contexts too. We have doctors sign off on medical exam results; accountants on tax audits; civil engineers on structural analyses. Because with judgment comes its twin: accountability. We need someone to stand behind a judgment call. And this someone need be, in Immanuel Kant's language, a rational being who is "a legislating member of the kingdom of ends"[3].

Why? Because only we get it. Each of us has experienced pain and pleasure, loss and gain, of both the physical and psychological kinds. Our lived experience informs us of what it's like[4] to be imprisoned, or to receive a medical misdiagnosis, even if we haven't experienced these directly. This gives us standing to make moral judgments[5]. Secondly, as interdependent members of civil society, we are suitable addressees of praise and blame. And so, we have skin in the game, i.e. accountability.

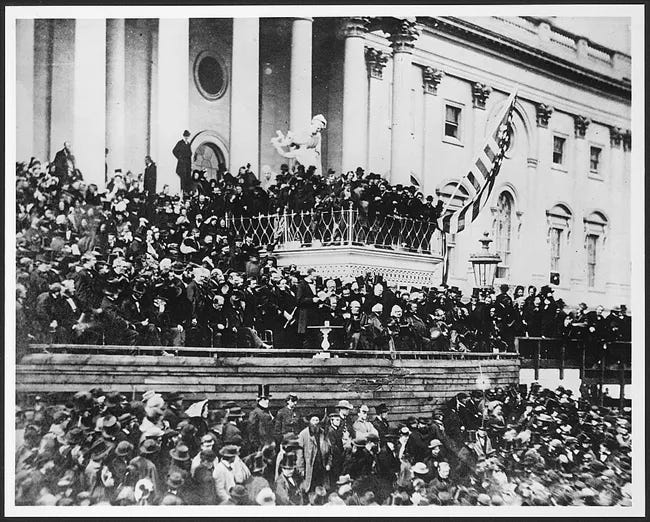

Current AI does not meet this membership standard. The only AIs we have envisioned that fit the bill are science-fictional, like the replicants in Blade Runner, or Commander Data from Star Trek. I suspect that if and when we get there, such AI entities will also be subject to said human frailties: bias, favoritism, and arbitrariness. These frailties arise from the same lived experience that grants the standing to judge. In issues of judgment, there is no avoiding moral culpability. Albeit in a very different context, Abraham Lincoln says it best:

“Fellow citizens, we cannot escape history. We of this Congress and this administration will be remembered in spite of ourselves. No personal significance or insignificance can spare one or another of us. The fiery trial through which we pass will light us down in honor or dishonor to the latest generation. [...] We, even we here, hold the power and bear the responsibility.”

-Abraham Lincoln. Annual Message to Congress. Dec 1, 1862.

[1] Dario Amodei. Machines of Loving Grace - How AI Could Transform the World for the Better. Oct 2024. https://darioamodei.com/machines-of-loving-grace

[2] Colit AI - AI-assisted Legal Argument. https://www.colit.ai

[3] This is from the third formulation of Kant's Categorical Imperative. Immanuel Kant, Critique of Practical Reason. 1788. Commentary.

[4] This "what it's like" is referred to as 'phenomenal consciousness' in the academic literature. See Butlin, Long et al., Consciousness in Artificial Intelligence: Insights from the Science of Consciousness. Aug 2023. https://arxiv.org/abs/2308.08708

[5] "Moral judgment" does not sound like an everyday occurrence. Nor does jury deliberation. But consider: When a 911 call is made, the dispatcher has to decide if the situation merits a police visit. If the police visit, they have to decide whether to make an arrest. If an arrest is made, the DA's office has to decide whether to prosecute. If a prosecution is begun, the public defender has to decide whether to recommend to the defendant a plea deal. I would argue that each of these decisions has a moral element that calls for human judgment.